Automotive In-Vehicle Vision Recognition POC

In-vehicle vision recognition POC using an offline multimodal LLM to validate the feasibility of edge deployment

Tech Stack

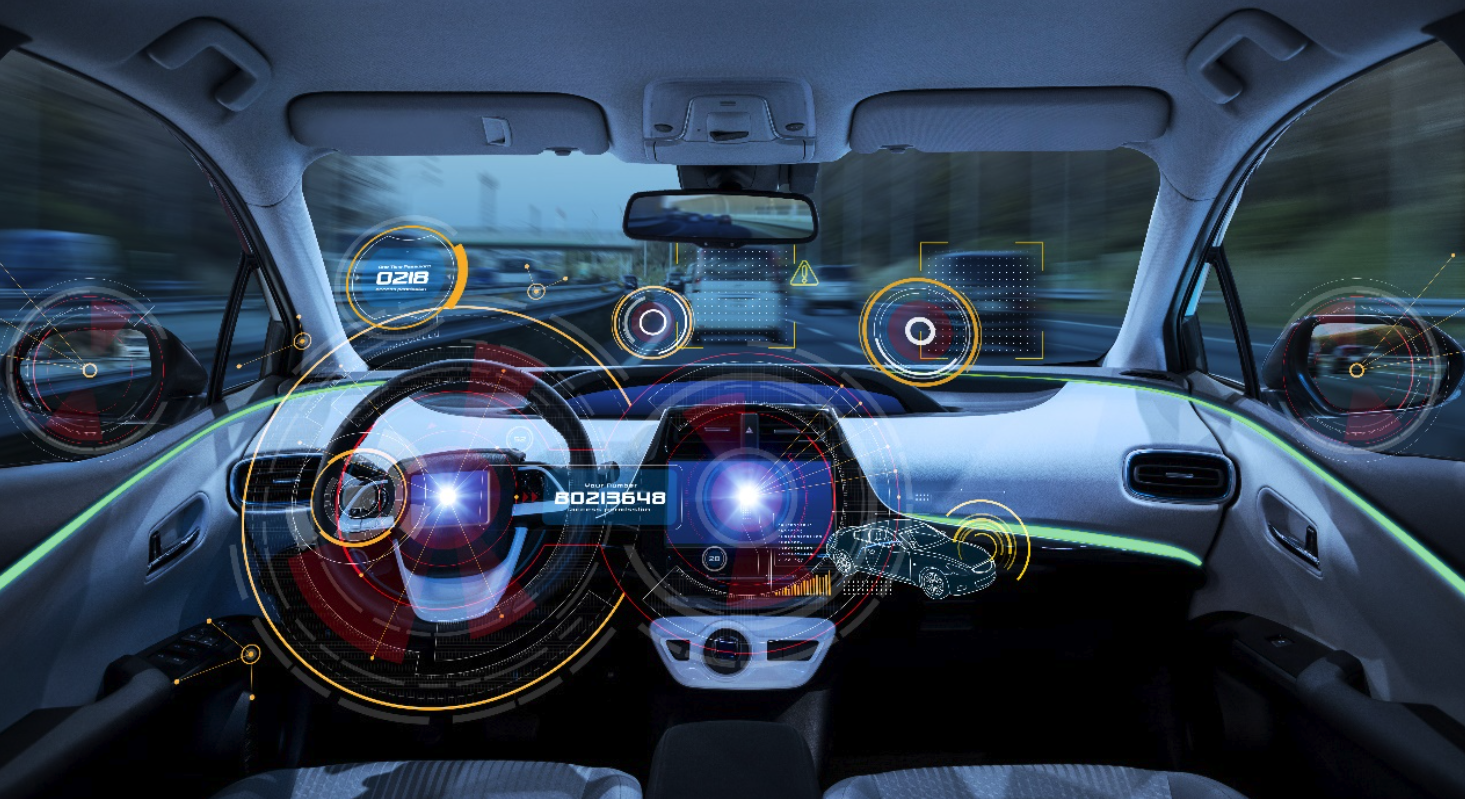

📋 Project Overview

We collaborated with a major automotive manufacturer's research lab on a proof-of-concept project to validate the feasibility of intelligent recognition from in-vehicle cameras. The goal was to enable smart alerts for scenarios such as forgotten items or children left in the back seat. The experiment showed that our locally deployed multimodal vision model could accurately recognize a range of objects across different environments with low latency, and that it runs on a single machine without cloud connectivity, which supports future single-chip deployment. These results gave the manufacturer technical validation for its future product roadmap.

🚀 Key Features

Core Implementation

- Offline Multimodal LLM Deployment: Local deployment using Ollama + Llama 3.2-Vision model, ensuring data privacy and eliminating network dependency

- Real-time Video Frame Analysis: Capture and analyze video frames on-demand, identifying objects, activities, and environmental context

- Low-latency Recognition: Optimized pipeline achieving fast inference suitable for automotive embedded scenarios

- Multi-environment Validation: Tested recognition accuracy under various lighting conditions and camera angles

Technical Highlights

- Edge-ready Architecture: Validated deployment capability on local hardware without cloud connectivity

- OpenCV Video Processing: Efficient video capture, frame extraction, and image preprocessing pipeline

- Flexible Recognition Prompts: Customizable prompt engineering for different detection scenarios

- Desktop Validation UI: Built interactive demo application for rapid testing and validation

💻 Project Details

Our POC system demonstrates the technical feasibility of in-vehicle intelligent monitoring through locally-deployed multimodal AI:

Video Processing Pipeline:

- OpenCV-based video capture supporting multiple formats (mp4, avi, mov, etc.)

- Real-time frame extraction with configurable resolution and quality settings

- Frame preprocessing including resizing and format conversion for optimal model input
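The capture, sampling, and preprocessing steps above can be sketched as follows. This is a minimal illustration, not the project's actual code: the function names and the `every_n`, `max_side`, and `jpeg_quality` parameters are hypothetical, and the defaults are placeholders rather than the settings used in the POC. Only standard OpenCV calls are used (`cv2.VideoCapture`, `cv2.resize`, `cv2.imencode`).

```python
from typing import Iterator, Tuple


def fit_within(width: int, height: int, max_side: int) -> Tuple[int, int]:
    """Scale (width, height) so the longer side is at most max_side, keeping aspect ratio."""
    longest = max(width, height)
    if longest <= max_side:
        return width, height
    scale = max_side / longest
    return max(1, round(width * scale)), max(1, round(height * scale))


def extract_frames(path: str, every_n: int = 30, max_side: int = 1024,
                   jpeg_quality: int = 85) -> Iterator[bytes]:
    """Yield every `every_n`-th frame of a video file as JPEG-encoded bytes."""
    import cv2  # imported lazily so fit_within() stays usable without OpenCV

    cap = cv2.VideoCapture(path)
    if not cap.isOpened():
        raise IOError(f"cannot open video: {path}")
    try:
        index = 0
        while True:
            ok, frame = cap.read()
            if not ok:  # end of stream or read error
                break
            if index % every_n == 0:
                h, w = frame.shape[:2]
                new_w, new_h = fit_within(w, h, max_side)
                if (new_w, new_h) != (w, h):
                    # INTER_AREA is a common choice when shrinking images
                    frame = cv2.resize(frame, (new_w, new_h),
                                       interpolation=cv2.INTER_AREA)
                encoded, buf = cv2.imencode(
                    ".jpg", frame, [cv2.IMWRITE_JPEG_QUALITY, jpeg_quality])
                if encoded:
                    yield buf.tobytes()
            index += 1
    finally:
        cap.release()
```

Yielding JPEG bytes rather than raw arrays keeps the output directly usable as a byte-stream input for the model wrapper, and sampling every N-th frame is one simple way to keep analysis on demand rather than per frame.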

Local Vision Model Integration:

- Integrated Llama 3.2-Vision model via Ollama local inference server

- Designed flexible API wrapper supporting both file and byte-stream image inputs

- Implemented customizable prompts for detecting specific objects and scenarios
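A wrapper of this kind might look like the sketch below, which accepts either a file path or raw bytes and sends the image to a local Ollama server. It assumes Ollama's default address (`http://localhost:11434`), its `/api/generate` endpoint, and the `llama3.2-vision` model tag. The function names are hypothetical and not taken from the project.

```python
import base64
import json
import urllib.request
from pathlib import Path
from typing import Union

OLLAMA_URL = "http://localhost:11434/api/generate"  # assumed default local endpoint
MODEL = "llama3.2-vision"                           # assumed model tag

ImageInput = Union[str, Path, bytes]


def to_base64(image: ImageInput) -> str:
    """Accept a file path or raw image bytes and return a base64 string."""
    data = image if isinstance(image, bytes) else Path(image).read_bytes()
    return base64.b64encode(data).decode("ascii")


def build_payload(image: ImageInput, prompt: str, model: str = MODEL) -> dict:
    """Build the JSON body for a single non-streaming generate request."""
    return {
        "model": model,
        "prompt": prompt,
        "images": [to_base64(image)],  # Ollama expects base64-encoded images
        "stream": False,               # return one JSON object, not a stream
    }


def describe_image(image: ImageInput, prompt: str, timeout: float = 120.0) -> str:
    """Send the image and prompt to the local server and return the model's text."""
    body = json.dumps(build_payload(image, prompt)).encode("utf-8")
    request = urllib.request.Request(
        OLLAMA_URL, data=body, headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read())["response"]
```

For example, `describe_image("frame.jpg", "Describe what is on the back seat.")` would return the model's free-text description, provided the server is running and the model has been pulled.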

Recognition Capabilities Validated:

- Object detection (bags, toys, personal items, child seats, etc.)

- Activity recognition (movement, presence detection)

- Environmental context analysis (lighting conditions, scene description)

- Multi-object scenario handling
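One way to make prompts customizable per detection scenario is to keep a small table of scenario descriptions and ask the model for a structured answer. The sketch below is illustrative only: the scenario names, the prompt wording, and the JSON reply shape are assumptions, not the prompts used in the POC.

```python
import json
from typing import Dict

# Hypothetical scenario table; the wording is illustrative.
SCENARIOS: Dict[str, str] = {
    "forgotten_items": "bags, toys, or other personal items left on the seats or floor",
    "child_presence": "a child or infant, including one sitting in a child seat",
}


def build_prompt(scenario: str) -> str:
    """Compose a prompt that asks the model for a JSON answer."""
    target = SCENARIOS[scenario]
    return (
        "You are looking at a frame from a camera inside a car. "
        f"Check for {target}. "
        'Answer only with JSON of the form {"detected": true or false, '
        '"objects": ["..."], "scene": "short description"}.'
    )


def parse_answer(text: str) -> dict:
    """Extract the JSON object from the reply; keep the raw text if none parses."""
    start, end = text.find("{"), text.rfind("}")
    if start != -1 and end > start:
        try:
            data = json.loads(text[start:end + 1])
        except json.JSONDecodeError:
            data = None
        if isinstance(data, dict):
            objects = data.get("objects") or []
            if not isinstance(objects, list):
                objects = [objects]
            return {
                "detected": bool(data.get("detected", False)),
                "objects": [str(item) for item in objects],
                "scene": str(data.get("scene", "")),
            }
    # detected=None marks "unknown" so callers do not mistake it for "nothing found"
    return {"detected": None, "objects": [], "scene": text.strip()}
```

Model replies are not guaranteed to follow the requested format, which is why the parser tolerates surrounding text and reports an unparseable answer as unknown instead of as a negative result.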

Demo Application Development:

- Built Tkinter-based desktop UI for live video playback and frame-by-frame analysis

- Real-time recognition result display with detailed AI-generated descriptions

- System health monitoring for model availability and connection status
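The health check for model availability and connection status could be implemented along these lines. The sketch assumes Ollama's `/api/tags` endpoint, which lists locally installed models, and the default server address. The function names and status strings are hypothetical.

```python
import json
import urllib.error
import urllib.request


def has_model(tags: dict, model: str) -> bool:
    """Return True if `model` appears in an /api/tags response, with or without a tag suffix."""
    names = [entry.get("name", "") for entry in tags.get("models", [])]
    return any(name == model or name.split(":")[0] == model for name in names)


def check_ollama(base_url: str = "http://localhost:11434",
                 model: str = "llama3.2-vision",
                 timeout: float = 3.0) -> str:
    """Report 'server unreachable', 'model missing', or 'ready'."""
    try:
        with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as response:
            tags = json.loads(response.read())
    except (urllib.error.URLError, OSError, ValueError):
        # Connection refused, timeout, or a malformed response body
        return "server unreachable"
    return "ready" if has_model(tags, model) else "model missing"
```

A desktop UI can call `check_ollama()` on a timer and show the returned status next to the video view, so a stopped server or a missing model is visible before the user requests an analysis.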

📊 Project Impact

Technical Feasibility Validation:

- Successfully demonstrated that locally-deployed multimodal vision models can achieve sufficient accuracy for in-vehicle object detection

- Validated low-latency performance suitable for real-time automotive applications

- Confirmed single-machine deployment capability, supporting future embedded chip integration
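Latency claims like these are easiest to compare across hardware when they are measured the same way each time. The helper below is a hypothetical sketch of such a measurement, not the harness used in the project. It times any zero-argument callable, for example a function that sends one frame to the local model.

```python
import statistics
import time
from typing import Callable, Dict


def measure_latency(call: Callable[[], object], runs: int = 10,
                    warmup: int = 1) -> Dict[str, float]:
    """Time `call` over several runs and summarize the results in seconds."""
    for _ in range(warmup):
        call()  # warm-up runs absorb one-off costs such as model loading
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        call()
        samples.append(time.perf_counter() - start)
    return {
        "runs": float(len(samples)),
        "min_s": min(samples),
        "median_s": statistics.median(samples),
        "max_s": max(samples),
    }
```

Reporting the median alongside the minimum and maximum gives a more stable picture than a single timing, since the first request after a model load is usually much slower than later ones.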

Research Value Delivered:

- Provided the automotive manufacturer's research team with concrete evidence for their product planning decisions

- Established baseline performance metrics for recognition accuracy across different environmental conditions

- Created reusable codebase and architecture patterns for future development phases

🛠️ Technology Stack

AI & Vision:

- Llama 3.2-Vision (Multimodal LLM)

- Ollama (Local Model Inference)

- OpenCV (Computer Vision)

Local Deployment:

- Edge AI Architecture

- Offline Inference

- Single-machine Deployment

Video Processing:

- Real-time Frame Extraction

- Multi-format Support

- Image Preprocessing

Development:

- Python

- Tkinter (Demo UI)

- Pillow (Image Processing)

This project demonstrates the practical application of locally-deployed multimodal AI for automotive in-vehicle monitoring scenarios, validating the technical foundation for intelligent vehicle safety features.